Justice Connect’s six AI principles: A framework for ethical AI for access to justice

30 Jul 2025

At Justice Connect, we’ve always been brave about doing things differently.

In response to rising, more complex legal need, we leaned into digital innovation because we believe in the power of technology to improve how people navigate the legal system.

For legal services under pressure, it’s an ongoing challenge to quickly and fairly work out who needs what kind of help, and how they can access it. Every day, people come to us stressed and overwhelmed. The legal system is complex, and when you’re in a crisis, complexity feels like a wall.

Our award-winning AI model identifies a person’s legal issue based on how they describe it, and guides them to the right legal resources or services. Trained on a large dataset of de-identified legal problem descriptions, and verified by lawyers, our AI model helps match people more accurately with the right legal support.

Although artificial intelligence has been making headlines for years, and public debate is growing, the regulatory landscape is yet to fully cover how organisations can act ethically and safely when it comes to AI. As such, there is an immediate need for leadership on these issues from a social justice perspective.

In developing and designing our own AI systems to improve access to justice, we knew we needed systems in place to mitigate the harms associated with AI and demonstrate its capacity to be deployed ethically and for the public good.

Recognising this need, we developed six AI ethics principles that we embody in our AI work. Aligned with the NSW Government and Australian Government’s AI Ethics Principles, the framework serves as a foundation for our AI governance best practice, which continues to adapt as the regulatory landscape matures.

The framework was made to guide both our own work and that of the broader access to justice sector as AI becomes more integrated into it.

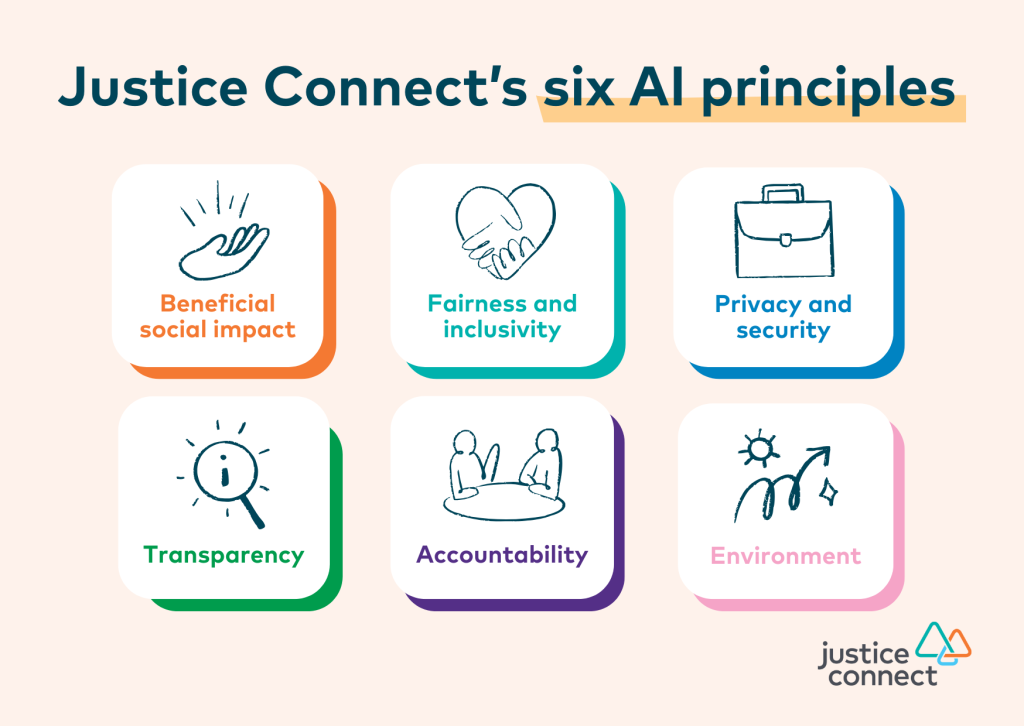

Justice Connect’s six AI ethics principles

1. Beneficial social impact

We make sure our use of AI delivers a beneficial social impact that is better than what would be the case if the AI model were not in use.

This requires us to consider the impacts of an AI system at different scales, including the individual, the community, and society, at both a national and (where relevant) international level.

By integrating our AI model into our Intake Tool, we’re seeing real benefits. People who use our AI-supported tools are reaching the right information and support faster. There’s no need to know legal terminology – just describe your issue in your own words, and the system does the work.

The AI also helps legal services by doing the time-consuming work of sorting and directing requests. It reduces delays by sending matters to the right place, fast. It improves referrals by basing decisions on accurate, consistent issue identification. And it helps connect people to the most appropriate services – those with the expertise or mandate to help.

2. Fairness and inclusivity

Fairness and inclusivity are key considerations when building our AI systems. This means accounting for the inherent biases that come with AI, and taking active measures to mitigate them so that our AI systems can be used by everyone.

Technology does not always perform equally for all members of our community, and often carries the biases of the people who build, use, and maintain it.

Throughout the development of our AI model, we identified underperformance issues in accurately identifying legal issues when they are described by older people. To actively address this, we worked with community and government organisations to collect an additional 12,000 language samples to retrain the model, including 2,000 specifically from older people.

Since integrating the re-trained model into our Intake Tool in December 2023, performance for older people has improved by 8%.

We also observed that when we worked to improve performance for older people, we improved performance for everyone. We are committed to continuing to explore opportunities to improve the model’s fairness, and to make sure it is useful and accessible for all members of the community.
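For teams wanting to run a similar check on their own tools, the sketch below shows one simple way to compare accuracy across groups. It is an illustration only, not Justice Connect's evaluation code: the function name, the age-group labels, and the sample records are all hypothetical.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return {group: accuracy} for (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}

# Hypothetical records: (age group, predicted issue, lawyer-verified issue).
records = [
    ("under_65", "tenancy", "tenancy"),
    ("under_65", "employment", "employment"),
    ("under_65", "fines", "fines"),
    ("under_65", "tenancy", "employment"),
    ("65_plus", "tenancy", "tenancy"),
    ("65_plus", "fines", "employment"),
    ("65_plus", "employment", "tenancy"),
    ("65_plus", "fines", "fines"),
]

scores = accuracy_by_group(records)
# A large gap between groups signals where more training samples may be needed.
gap = scores["under_65"] - scores["65_plus"]
print(scores, gap)
```

Running the same comparison before and after retraining shows whether the gap between groups has narrowed.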

3. Privacy and security

Justice Connect’s AI projects include the highest levels of privacy and security assurance, building on our commitment to privacy set out in our privacy policy.

In this way, Justice Connect is committed to maintaining the human right of privacy, protecting the interests of the community, and building public confidence in AI as a tool to support social justice.

We ensure our AI systems operate according to the principles of privacy and security in several ways, including:

- Ensuring cyber security risks are appropriately managed.

- Providing sufficient information on how data from users will be used to ensure informed consent.

- Maintaining accurate records of data sets.

- Consistently ensuring that all training data is anonymised to protect client privacy.

4. Transparency

Justice Connect’s use of AI is transparent to users and the broader community. Transparency means ensuring that our AI systems are legible and understandable to the organisation, users, stakeholders, and the public at large.

One measure we have taken to build transparency around our use of AI is producing an animated video on our AI model, including helpful tips on how users can spot safe, ethical AI. To ensure it was seen widely among the communities we serve, we ran targeted online outreach to drive engagement. Since its release in May 2024, the video has been viewed over 119,000 times.

5. Accountability

Accountability means that decision making remains the responsibility of Justice Connect, and that all AI-based decisions within the organisation are subject to human review and intervention.

To train the model, we built our Training AI Game (TAG), a tool where lawyers from Justice Connect’s pro bono network highlight key legal phrases in real examples. More than 310,000 snippets have been highlighted so far. TAG serves as a great example of how the sector can work together to improve digital tools in a hands-on, meaningful way.

Our newest training tool, Consensus, lets legal experts collaboratively test how consistently legal problems are categorised – keeping human judgement at the heart of the process.
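As a rough illustration of what testing consistency can look like in practice, the sketch below measures how strongly a group of reviewers agrees on each problem description. It does not describe how Consensus works internally: the function name, the category labels, and the sample reviews are hypothetical.

```python
from collections import Counter

def majority_agreement(labels):
    """Return (majority label, share of reviewers who chose it)."""
    counts = Counter(labels)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(labels)

# Hypothetical reviews: each description is categorised by several legal experts.
reviews = {
    "description_1": ["tenancy", "tenancy", "tenancy"],
    "description_2": ["employment", "fines", "employment", "employment"],
}

results = {name: majority_agreement(labels) for name, labels in reviews.items()}
# Anything short of full agreement is set aside for human discussion.
needs_discussion = [name for name, (_, share) in results.items() if share < 1.0]
print(results, needs_discussion)
```

Descriptions with less than full agreement are flagged for people to discuss rather than resolved automatically, which keeps the final judgement with the reviewers.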

6. Environment

Justice Connect is a leading provider of pro bono legal services to people and community organisations impacted by disasters fuelled by climate change. AI will assist Justice Connect in providing more targeted and appropriate assistance for these matters. However, the increasing use of AI systems and their associated energy and resource requirements are exacerbating the climate crisis, particularly with the emergence of generative AI models. The inclusion of this principle ensures Justice Connect’s AI guidelines align with its existing work in responding to the climate crisis.

Technology, like any intervention, works best when it is deployed to augment and support the human experience of finding the right legal help.

Through these guidelines, Justice Connect’s AI projects continue to be guided by principles of justice, fairness, and putting the community at the centre of all we do.

If your organisation would like to learn more or explore ways to get involved, please get in touch with Justice Connect’s Innovation team.