We’re building cutting-edge AI to identify legal problems

19 Jul 2021

Justice Connect is developing cutting-edge artificial intelligence (AI) to help overcome critical barriers between help-seekers and legal help providers in Australia.

As demand for legal help grows each year, legal organisations are building online resources and digital strategies to increase service reach, scale, accessibility and efficiency. However, barriers stand in the way of these strategies succeeding. The main one is connecting people with relevant resources and services without first requiring lawyers to manually determine the legal problem, a step that further delays their capacity to provide suitable help.

Together with our funding partners and AI experts at the University of Melbourne School of Computing Science, Justice Connect is poised to crack this challenge.

Across 2020 and early 2021, we have been working to produce a proof-of-concept natural language processing AI model using annotated language samples generated through our AI project.

With seed funding initially provided by the Victorian Legal Services Board, the project has now received a welcome funding boost through the NSW Access to Justice Innovation Fund. The new funding will support building out the AI model to increase its inclusivity and performance, with specific efforts to collect diverse language samples across NSW.

The case for diagnostic AI in the legal assistance sector

Many people who recognise their problem has a legal dimension do not know the technical legal terminology around their issue. Through research with hundreds of help-seekers we found 41% of Justice Connect online users identified as unable to categorise their legal issue. We also found evidence of very high inaccuracy rates amongst those believing they applied a correct category.

In the words of our users, when asked why they could not identify their legal problem category: “It’s complex and I’m not an expert!” and “[I’m unsure of the category] because of the family relationship [issue] together with financial issue.”

The struggle to categorise and name legal problems causes particular issues in online settings when people search via search engines, websites and directories for legal services without the right search terms and technical language. They can struggle to find relevant resources and services and can end up more confused than when they started their search for answers.

While acute in online settings, the challenge of identifying legal problem categories also impacts legal services in offline settings, in particular in intake and triage processes where volunteers can face difficulty efficiently and accurately triaging people seeking legal assistance. This then further complicates and delays people’s access to timely legal assistance.

Natural language processing AI offers huge potential to help diagnose legal issues and to give people the language they need to confidently describe their problem and find the right legal services and resources quickly and easily.

Pro bono powered AI

Having launched an online intake system in 2018, we now have thousands of samples of natural language text describing legal problems.

A key challenge in natural language-based AI projects is generating a training data set. In our project, we need legal professionals to annotate language samples – a potentially expensive exercise. However, we have partnered with pro bono lawyers to achieve expert annotation at huge volumes.

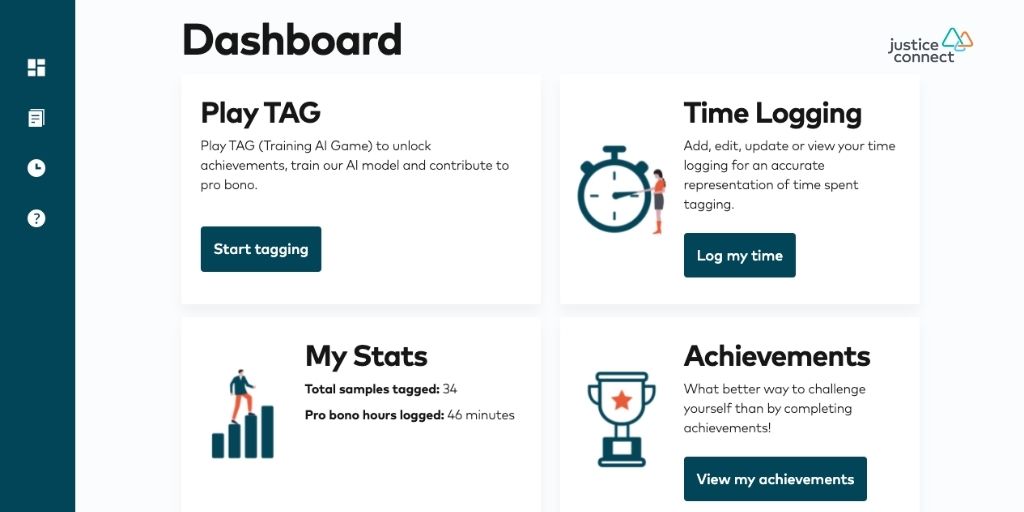

Our in-house innovation team built a Training AI Game (TAG) that presents language samples to participating pro bono lawyers and requires them to annotate the samples in several ways. The samples can then be exported in an annotated format and provided to the University of Melbourne team helping to train the AI model.

We meticulously deidentify and anonymise the language samples prior to adding them to the game and each sample is reviewed multiple times to ensure accuracy.

Screenshot of our in-house Training AI Game (TAG)

Progress in Numbers

- Lawyers onboarded: 235

- Number of annotations applied by lawyers via our TAG game: 59,991

- Annotated samples (each original sample is counted up to 5 times as each sample is annotated by 5 different lawyers): 19,231

- Top 3 area-of-law tags applied:

  - Litigation and dispute resolution

  - Employees and volunteers

  - Housing and residential tenancies
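Because each sample is annotated by several lawyers, the individual tags have to be combined into one label per sample before training. The article does not say how this is done. The sketch below assumes a simple majority vote with a minimum agreement threshold, and the function name, threshold and sample data are all hypothetical.

```python
from collections import Counter


def majority_label(labels, min_agreement=0.6):
    """Return the most common label if enough annotators agree, else None.

    min_agreement is an assumed threshold, not a figure from the project.
    """
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    return label if count / len(labels) >= min_agreement else None


# Hypothetical annotations: sample id -> area-of-law tags from five lawyers.
annotations = {
    "sample-1": [
        "Housing and residential tenancies",
        "Housing and residential tenancies",
        "Housing and residential tenancies",
        "Housing and residential tenancies",
        "Litigation and dispute resolution",
    ],
    "sample-2": [
        "Employees and volunteers",
        "Employees and volunteers",
        "Litigation and dispute resolution",
        "Litigation and dispute resolution",
        "Housing and residential tenancies",
    ],
}

# Samples without clear agreement map to None and could be sent back for review.
consensus = {sample_id: majority_label(tags) for sample_id, tags in annotations.items()}
```

Here `sample-1` resolves to a single tag, while `sample-2` has no tag with enough agreement and is left unlabelled rather than guessed.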

The inclusivity challenge

Naively trained text classification models are often problematically biased, performing substantially worse for under-represented or socially disadvantaged demographics.

Building an unbiased diagnostic AI model takes more effort, but is particularly important for the legal assistance sector because the people most at risk of experiencing an underperforming AI model are those that are in greatest need of legal help.

The latest stage of our AI project will be supported by the NSW Access to Justice Innovation Fund, to undertake community outreach and proactively capture language samples from diverse groups of people so that these can be explicitly incorporated into our model training dataset. Once we have enriched our training data, we will be able to test the model’s performance with the priority cohorts identified to ensure that the model is performing at least as well for these cohorts as it is on average.
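The check described above, that the model performs at least as well for priority cohorts as it does on average, can be sketched as a per-cohort accuracy comparison. This is a minimal illustration only: accuracy is an assumed metric, and the cohort names, labels and function names are hypothetical rather than taken from the project.

```python
from collections import defaultdict


def accuracy_by_cohort(records):
    """Compute accuracy per cohort from (cohort, true_label, predicted_label) tuples."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for cohort, truth, predicted in records:
        totals[cohort] += 1
        correct[cohort] += int(truth == predicted)
    return {cohort: correct[cohort] / totals[cohort] for cohort in totals}


def underperforming_cohorts(records, priority_cohorts):
    """Return overall accuracy and the priority cohorts that fall below it."""
    records = list(records)
    overall = sum(truth == predicted for _, truth, predicted in records) / len(records)
    per_cohort = accuracy_by_cohort(records)
    flagged = {
        cohort: acc
        for cohort, acc in per_cohort.items()
        if cohort in priority_cohorts and acc < overall
    }
    return overall, flagged


# Hypothetical evaluation records: (cohort, true label, predicted label).
records = [
    ("general", "housing", "housing"),
    ("general", "employment", "employment"),
    ("general", "housing", "employment"),
    ("general", "employment", "employment"),
    ("cohort_a", "housing", "housing"),
    ("cohort_a", "employment", "employment"),
    ("cohort_b", "housing", "housing"),
    ("cohort_b", "housing", "employment"),
]

overall, flagged = underperforming_cohorts(records, {"cohort_a", "cohort_b"})
```

In this toy data, `cohort_b` falls below the overall accuracy and is flagged, which is the kind of gap that additional, more diverse language samples are meant to close.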

- Read more about Justice Connect’s globally recognised digital innovation work.

- Read more about the NSW Access to Justice Innovation Fund.